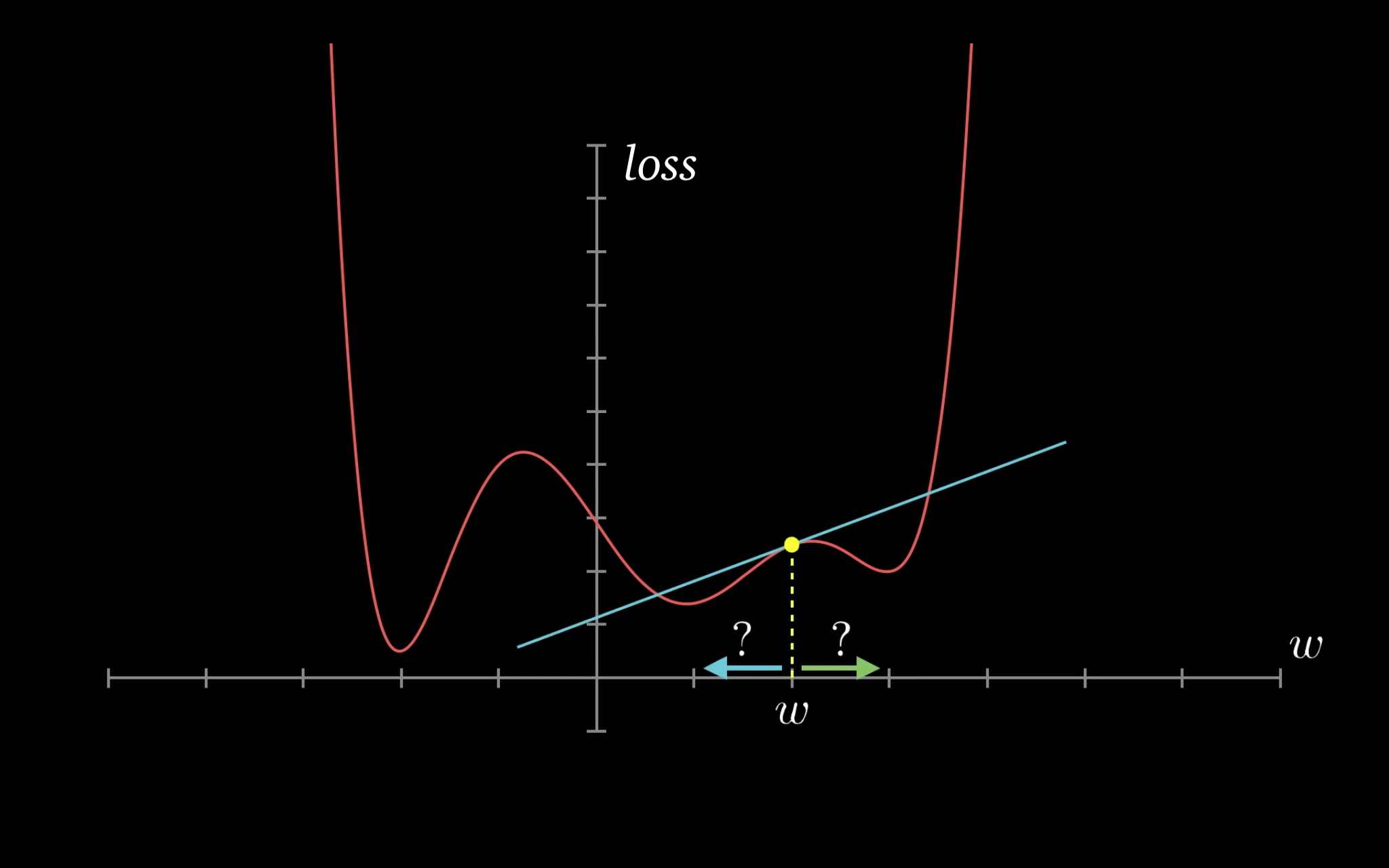

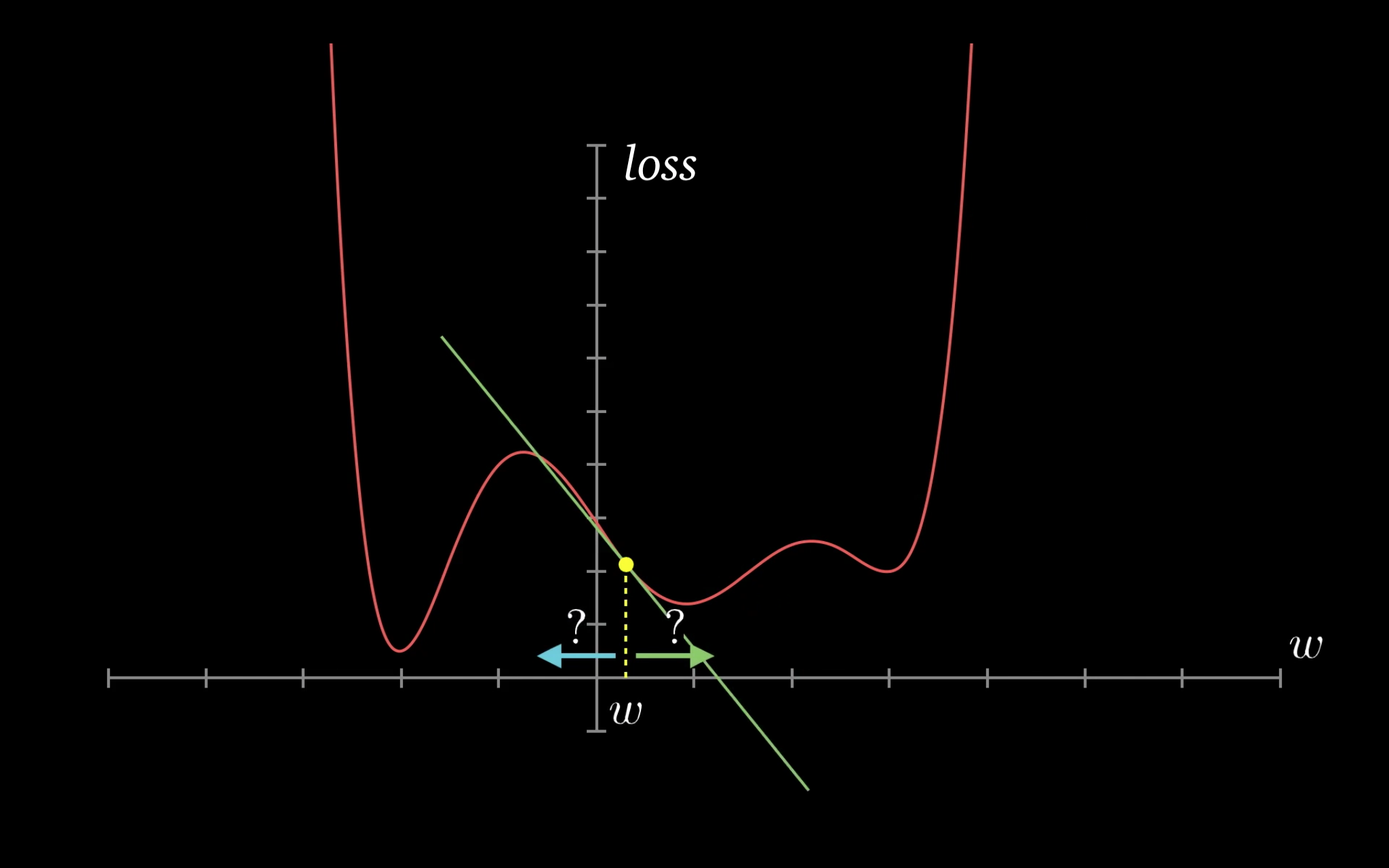

A key insight from calculus is that the gradient gives the rate of change of the loss, that is, the slope of the loss function with respect to the weights and biases.

- If a gradient element is positive:
  - increasing the corresponding weight or bias slightly will increase the loss.
  - decreasing the corresponding weight or bias slightly will decrease the loss.
- If a gradient element is negative:
  - increasing the corresponding weight or bias slightly will decrease the loss.
  - decreasing the corresponding weight or bias slightly will increase the loss.
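The sign rules above can be checked numerically. The following is a minimal sketch, assuming a toy 1-D linear model `w * x + b` trained with mean squared error; the data, `loss`, `grad`, and `nudge_effect` are hypothetical names for illustration, and the gradient is derived by hand rather than computed by an autograd library.

```python
# Hypothetical toy data for a 1-D linear model: pred = w * x + b
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]


def loss(w, b):
    """Mean squared error of w * x + b against the targets ys."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(w, b):
    """Hand-derived gradient of the MSE loss with respect to (w, b)."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def nudge_effect(w, b, eps=1e-3):
    """Change in loss when each parameter is moved slightly up or down."""
    base = loss(w, b)
    return {
        "w+": loss(w + eps, b) - base,
        "w-": loss(w - eps, b) - base,
        "b+": loss(w, b + eps) - base,
        "b-": loss(w, b - eps) - base,
    }


w, b = 1.0, 2.25
dw, db = grad(w, b)
print(f"dw = {dw:+.4f}, db = {db:+.4f}")
for name, change in nudge_effect(w, b).items():
    print(f"{name}: loss changes by {change:+.6f}")
```

At `w = 1.0, b = 2.25` the gradient for `w` is negative and the gradient for `b` is positive, so nudging `w` up lowers the loss while nudging `b` up raises it, as the rules predict.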

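For a small change, the resulting increase or decrease in the loss is approximately proportional to the magnitude of the gradient element: to first order, the change in loss equals the gradient element times the size of the nudge.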
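The proportionality can be illustrated with a first-order (linear) approximation. This is a minimal sketch, assuming a hypothetical one-weight model `w * x` with squared error on a single sample `(x=2.0, y=4.0)`; it compares the actual change in loss after a small step `delta` with the prediction `grad * delta`.

```python
def loss(w, x=2.0, y=4.0):
    """Squared error of a one-weight model w * x on a single hypothetical sample."""
    return (w * x - y) ** 2


def grad(w, x=2.0, y=4.0):
    """Derivative of (w * x - y) ** 2 with respect to w: 2 * (w * x - y) * x."""
    return 2 * (w * x - y) * x


delta = 1e-3  # small step applied to the weight
for w in (2.5, 4.0):
    g = grad(w)
    actual = loss(w + delta) - loss(w)
    predicted = g * delta  # first-order (linear) approximation of the change
    print(f"w={w}: grad={g:.1f}, actual change={actual:.6f}, grad*delta={predicted:.6f}")
```

The gradient at `w = 4.0` is four times the gradient at `w = 2.5`, and the actual change in loss for the same step is roughly four times larger as well; the small leftover difference comes from the second-order term, which shrinks as `delta` gets smaller.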